It’s becoming easier to use machine learning (ML) in your mobile apps, especially with technologies like TensorFlow and Cloud Vision. But training machine learning models can take significant time and effort, and that’s where Google’s Firebase ML Kit can help! Even if you have no machine learning experience, you can use its pre-trained models to get a head start. Common use cases include detecting faces, recognizing text, labeling images, and scanning barcodes. Let’s take a closer look at how the Face Detection API works.

Face Detection

ML Kit’s Face Detection API lets you detect faces and their expressions in photos, videos, and real-time applications such as live streams or video chat. However, it is not facial recognition: it cannot tell you who a person is.

ML Kit’s Face Detection API

Each detected face can be given a tracking ID (when face tracking is enabled), along with the following information:

| Facial Landmarks | Feature Probabilities | Facial Contours |
| --- | --- | --- |
| Left eye | Smiling | Nose bridge |
| Right eye | Left eye open | Left eye shape |
| Bottom of mouth | Right eye open | Top of upper lip |

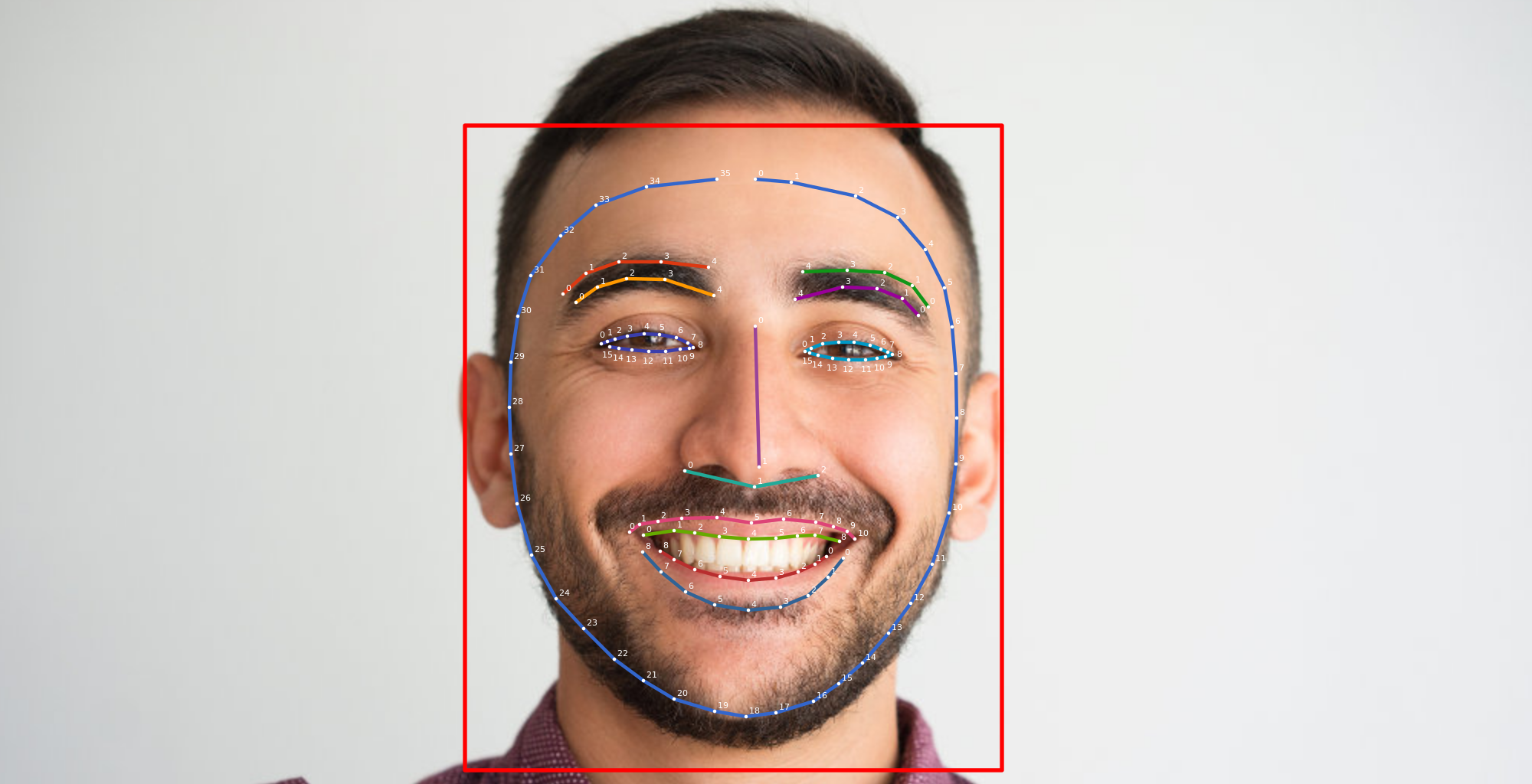
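
Tracking IDs matter most for video: the same ID should reappear while the same face stays in view, so you can follow one person from frame to frame. The sketch below is a plain-Python model under stated assumptions, not the ML Kit API: `DetectedFace` and `group_by_tracking_id` are hypothetical names, and each frame is assumed to arrive as a list of already-detected faces.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectedFace:
    """Hypothetical stand-in for one face reported in one video frame."""
    tracking_id: int
    smiling_probability: float


def group_by_tracking_id(frames):
    """Map each tracking ID to the (frame_index, face) pairs where it appears."""
    tracks = defaultdict(list)
    for frame_index, faces in enumerate(frames):
        for face in faces:
            tracks[face.tracking_id].append((frame_index, face))
    return dict(tracks)


# Three hypothetical frames: face 1 is seen in every frame, face 2 in frames 0 and 2.
frames = [
    [DetectedFace(1, 0.10), DetectedFace(2, 0.90)],
    [DetectedFace(1, 0.85)],
    [DetectedFace(1, 0.92), DetectedFace(2, 0.40)],
]

tracks = group_by_tracking_id(frames)
for tracking_id, appearances in tracks.items():
    print(tracking_id, [face.smiling_probability for _, face in appearances])
```

Grouping by ID like this makes per-person questions easy to answer, for example "when did face 1 start smiling?", without comparing bounding boxes between frames yourself.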

- Facial Landmarks: Coordinates for each facial feature detected.

- Feature Probabilities: A value between 0.0 and 1.0 indicating how likely a characteristic is present. For example, a “smiling” value of 0.7 means the person is most likely smiling.

- Facial Contours: A map of coordinates outlining the detected face, helpful if you want to add Snapchat-style filters. Contour detection is turned off by default.
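
To make the three kinds of output concrete, here is a minimal plain-Python model of one face result. It is a sketch, not the ML Kit API: `FaceResult`, `likely_features`, the snake_case feature names, and the 0.7 cutoff are all hypothetical choices for illustration. Contours default to `None` to mirror contour detection being off unless you enable it.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class FaceResult:
    """Hypothetical model of the information reported for one detected face."""
    landmarks: Dict[str, Point]        # feature name -> (x, y) coordinate
    probabilities: Dict[str, float]    # feature name -> value in [0.0, 1.0]
    # None models contour detection being turned off (the default).
    contours: Optional[Dict[str, List[Point]]] = None


def likely_features(face: FaceResult, threshold: float = 0.7) -> List[str]:
    """Return, sorted, the features whose probability meets the (assumed) threshold."""
    return sorted(name for name, p in face.probabilities.items() if p >= threshold)


face = FaceResult(
    landmarks={
        "left_eye": (120.0, 88.5),
        "right_eye": (160.0, 87.0),
        "bottom_of_mouth": (140.0, 150.0),
    },
    probabilities={"smiling": 0.7, "left_eye_open": 0.95, "right_eye_open": 0.2},
)

print(likely_features(face))   # ['left_eye_open', 'smiling']
print(face.contours is None)   # True
```

The threshold is an application decision rather than something the probabilities define: a stricter value such as 0.9 trades missed smiles for fewer false positives.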

For more detailed information, visit the ML Kit for Firebase docs.